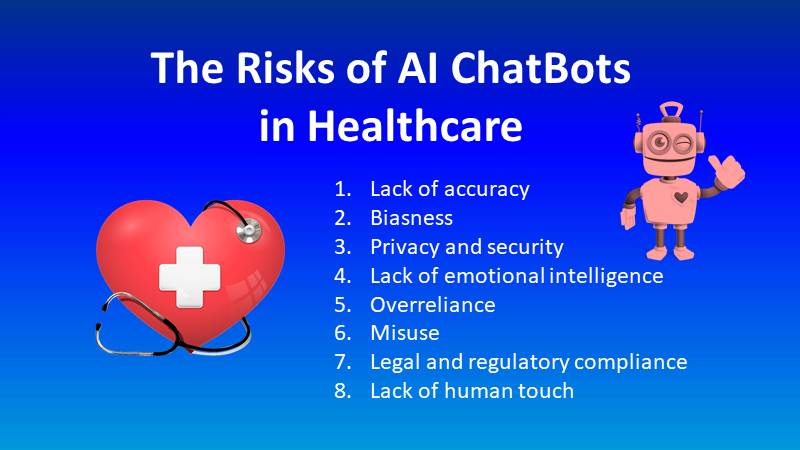

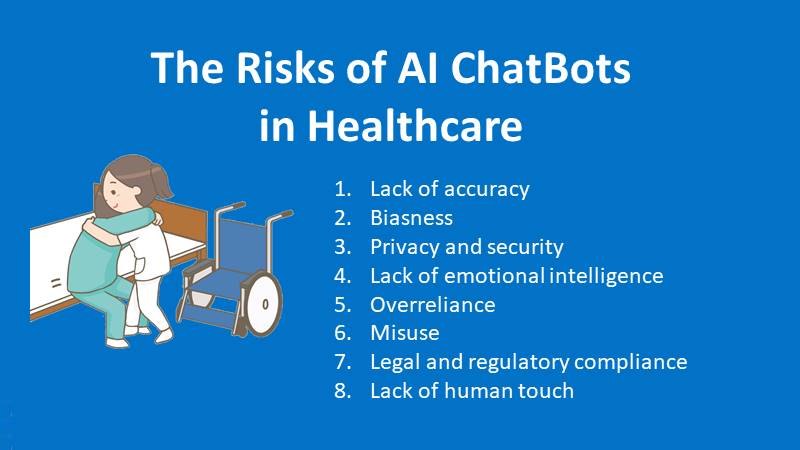

The Risks of AI Chatbots in Healthcare

The use of AI chatbots in healthcare carries a number of risks, including:

- Lack of accuracy: AI chatbots may not have access to the same level of information or expertise as a human healthcare professional, which could lead to inaccurate or incomplete information being provided to patients.

- Bias: AI chatbots may be trained on biased data, which could result in unfair or discriminatory treatment of certain groups of patients.

- Privacy and security: There is a risk that patient data may be mishandled or accessed by unauthorized parties when using AI chatbots.

- Lack of emotional intelligence: Chatbots cannot genuinely understand emotions or show empathy, which could make it difficult for them to support patients appropriately in situations involving emotional distress or complex emotional issues.

- Overreliance: Patients may rely too heavily on the chatbot and delay or forgo seeking professional help when it is needed.

- Misuse: Chatbots can be misused by patients or other individuals with bad intentions, for example to seek drugs or other treatments without a proper diagnosis or prescription.

- Legal and regulatory compliance: AI chatbots must comply with various laws, regulations and guidelines, such as the General Data Protection Regulation (GDPR) and the Health Insurance Portability and Accountability Act (HIPAA), which can be difficult to navigate.

- Lack of human touch: Chatbots are not capable of providing the human touch that many patients need, particularly in times of distress or uncertainty.

To mitigate these risks, proper safeguards and regulations should be in place when AI chatbot technology is implemented in healthcare, and chatbots should be used in conjunction with human healthcare professionals, not as a replacement for them.